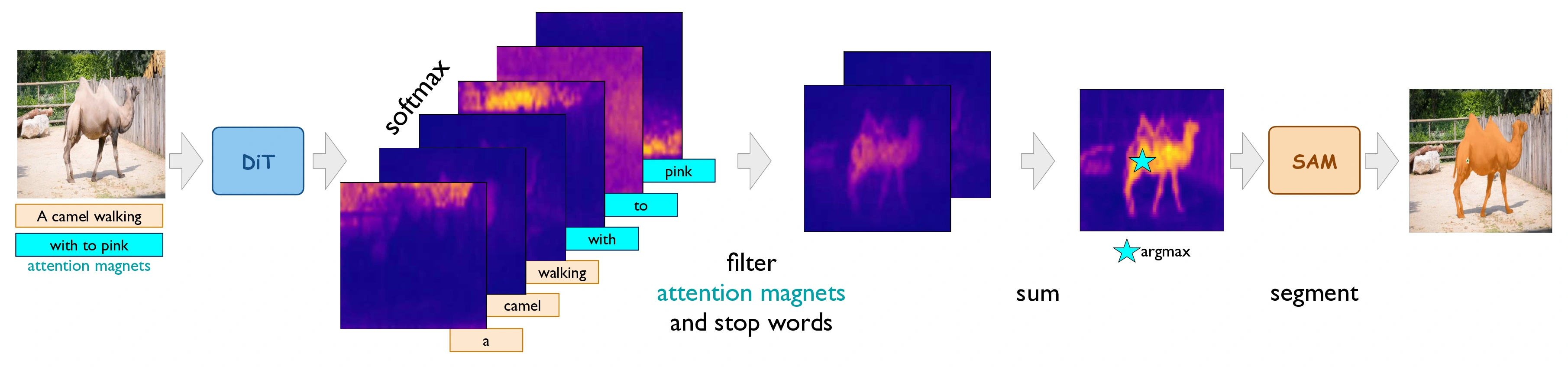

Method Overview

Most existing approaches to referring segmentation achieve strong performance only through fine-tuning or by composing multiple pre-trained models, often at the cost of additional training and architectural modifications. Meanwhile, large-scale generative diffusion models encode rich semantic information, making them attractive as general-purpose feature extractors. In this work, we introduce a new method that directly exploits features, specifically attention scores, from diffusion transformers for downstream tasks, requiring neither architectural modifications nor additional training. To systematically evaluate these features, we extend existing benchmarks with vision–language grounding tasks spanning both images and videos. Our key insight is that stop words act as attention magnets: they accumulate surplus attention and can be filtered to reduce noise. Moreover, we identify global attention sinks (GAS) emerging in deeper layers and show that they can be safely suppressed or redirected onto auxiliary tokens, leading to sharper and more accurate grounding maps. We further propose an attention redistribution strategy in which appended stop words partition background activations into smaller clusters, yielding more localized heatmaps. Building on these findings, we develop RefAM, a simple training-free grounding framework that combines cross-attention maps, GAS handling, and redistribution. Across zero-shot referring image and video segmentation benchmarks, our approach consistently outperforms prior methods, establishing a new state of the art without fine-tuning or additional components.

The Challenge: Referring segmentation, the task of localizing objects based on natural language descriptions, typically requires extensive fine-tuning or complex multi-model architectures. While diffusion transformers (DiTs) encode rich semantic information, directly leveraging their attention mechanisms for grounding tasks has remained largely unexplored.

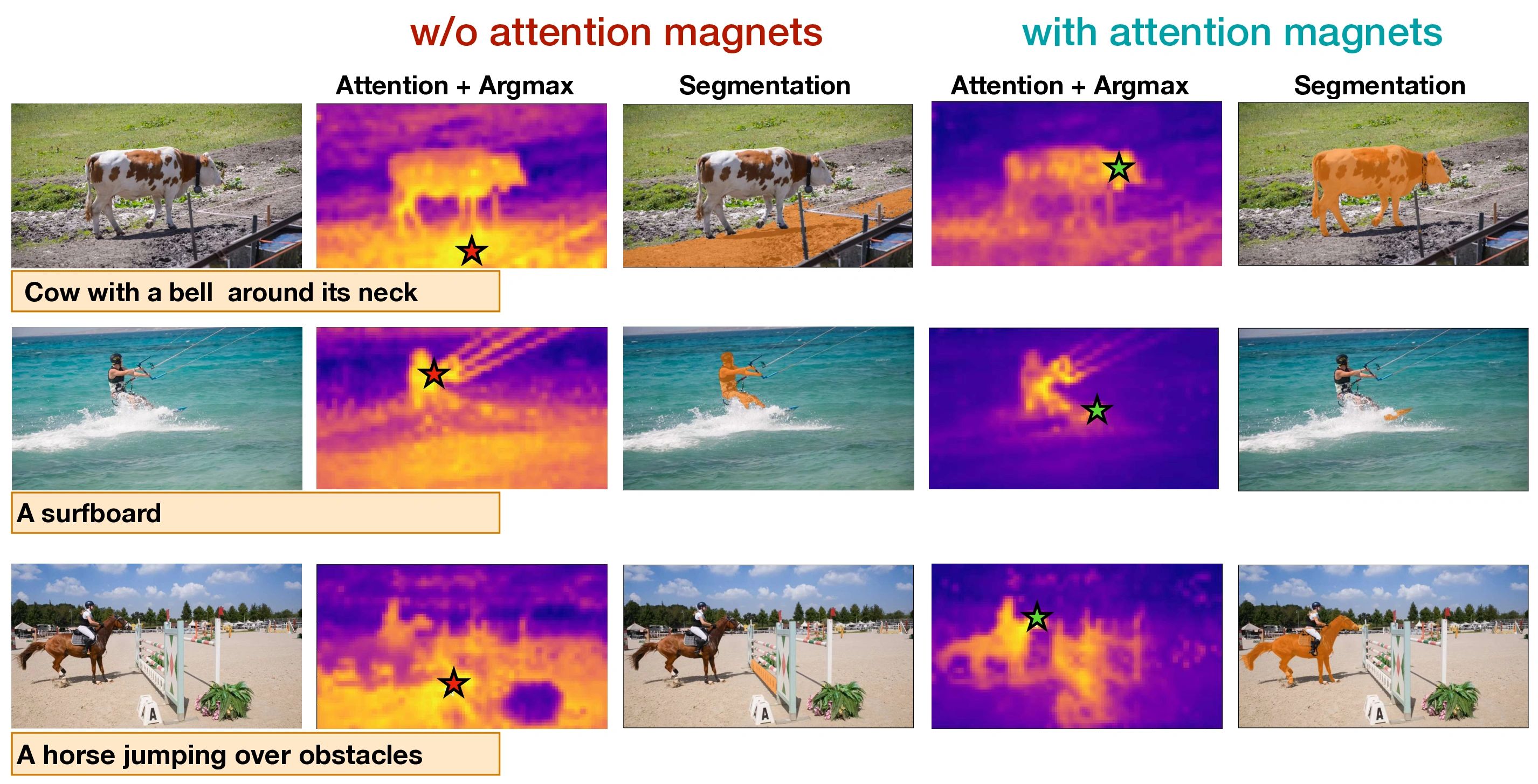

The Discovery: We uncover that diffusion transformers exhibit attention sink behaviors similar to large language models, where stop words accumulate disproportionately high attention despite lacking semantic value. This creates both challenges and opportunities for vision-language grounding.

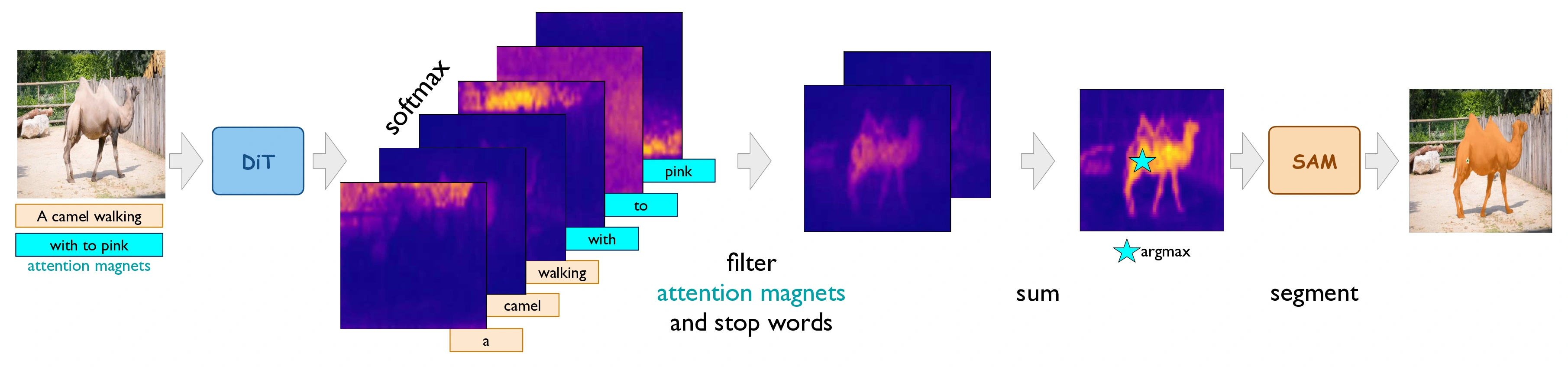

Our Solution: RefAM exploits stop words as "attention magnets": it deliberately adds them to referring expressions to absorb surplus attention, then filters them out to obtain cleaner attention maps. This simple strategy achieves state-of-the-art zero-shot referring segmentation without any fine-tuning or architectural modifications.

We identify and analyze global attention sinks (GAS) in diffusion transformers that act as attention magnets, linking their emergence to semantic structure and showing they can be safely filtered.

We introduce a novel strategy where strategically added stop words act as attention magnets, absorbing surplus attention to enable cleaner cross-attention maps for better grounding.

RefAM combines cross-attention extraction, GAS filtering, and attention redistribution into a simple framework that requires no fine-tuning or architectural modifications.

We achieve new state-of-the-art performance on referring image and video segmentation benchmarks, significantly outperforming prior training-free methods.

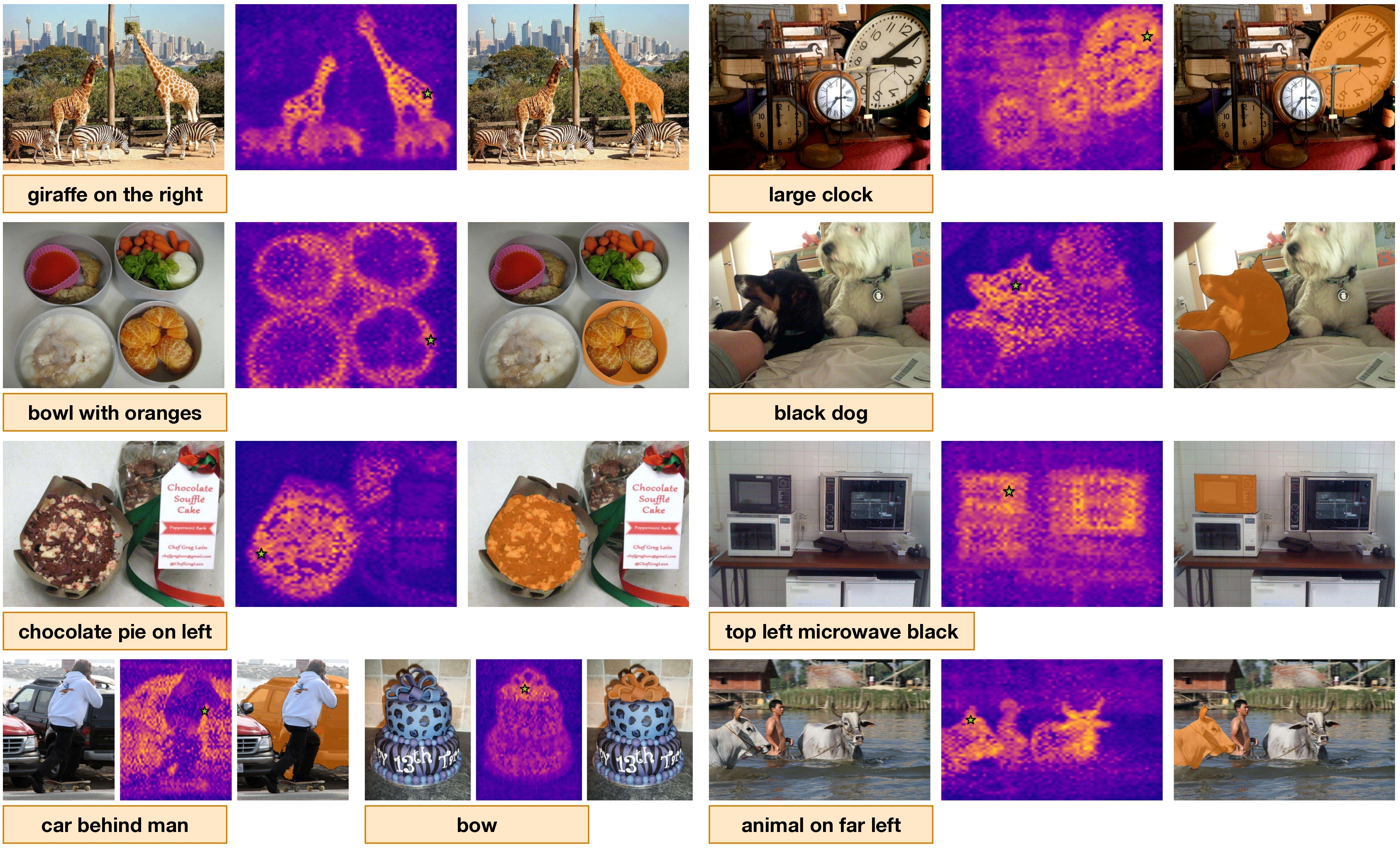

A key discovery in our work is that stop words (e.g., "the", "a", "of") act as attention magnets in cross-attention maps, absorbing significant attention scores and creating noisy backgrounds that hurt segmentation quality. We exploit this phenomenon by adding extra stop words to referring expressions, so that even more of the stray attention collects on them, and then filtering out all stop words to obtain cleaner attention maps. This simple technique improves the quality of attention-based segmentation across both image and video domains, leading to more precise object localization.
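The add-then-filter idea can be illustrated with a minimal sketch. It assumes whitespace tokenization, a small illustrative stop-word list, and toy attention values; the function names `add_magnets` and `filtered_heatmap` are hypothetical and are not taken from the RefAM code. A real pipeline would work on the text encoder's subword tokens and on attention maps extracted from the diffusion transformer.

```python
# Illustrative stop-word list (an assumption; the list used by RefAM is not given here).
STOP_WORDS = {"the", "a", "an", "of", "in", "on", "with", "and"}


def add_magnets(expression, magnets=("the", "a", "of")):
    """Append extra stop words that can absorb surplus attention."""
    return expression.split() + list(magnets)


def filtered_heatmap(tokens, attn):
    """Average per-token attention maps over non-stop-word tokens only.

    tokens: list of N words.
    attn:   list of N flattened attention maps, each a list of P floats.
    """
    keep = [i for i, tok in enumerate(tokens) if tok.lower() not in STOP_WORDS]
    if not keep:
        raise ValueError("expression contains only stop words")
    n_pixels = len(attn[0])
    return [sum(attn[i][p] for i in keep) / len(keep) for p in range(n_pixels)]


tokens = add_magnets("the red cup")
# Toy attention: one flattened 2x2 map per token (hypothetical values).
attn = [
    [0.4, 0.2, 0.2, 0.2],  # the
    [0.1, 0.7, 0.1, 0.1],  # red
    [0.0, 0.8, 0.1, 0.1],  # cup
    [0.3, 0.2, 0.3, 0.2],  # the (magnet)
    [0.3, 0.3, 0.2, 0.2],  # a   (magnet)
    [0.2, 0.3, 0.3, 0.2],  # of  (magnet)
]
heatmap = filtered_heatmap(tokens, attn)
# Index of the pixel with the strongest filtered response.
peak = max(range(len(heatmap)), key=heatmap.__getitem__)
```

In this toy case only the maps for "red" and "cup" contribute to the final heatmap, so the diffuse attention carried by the original and appended stop words is discarded.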

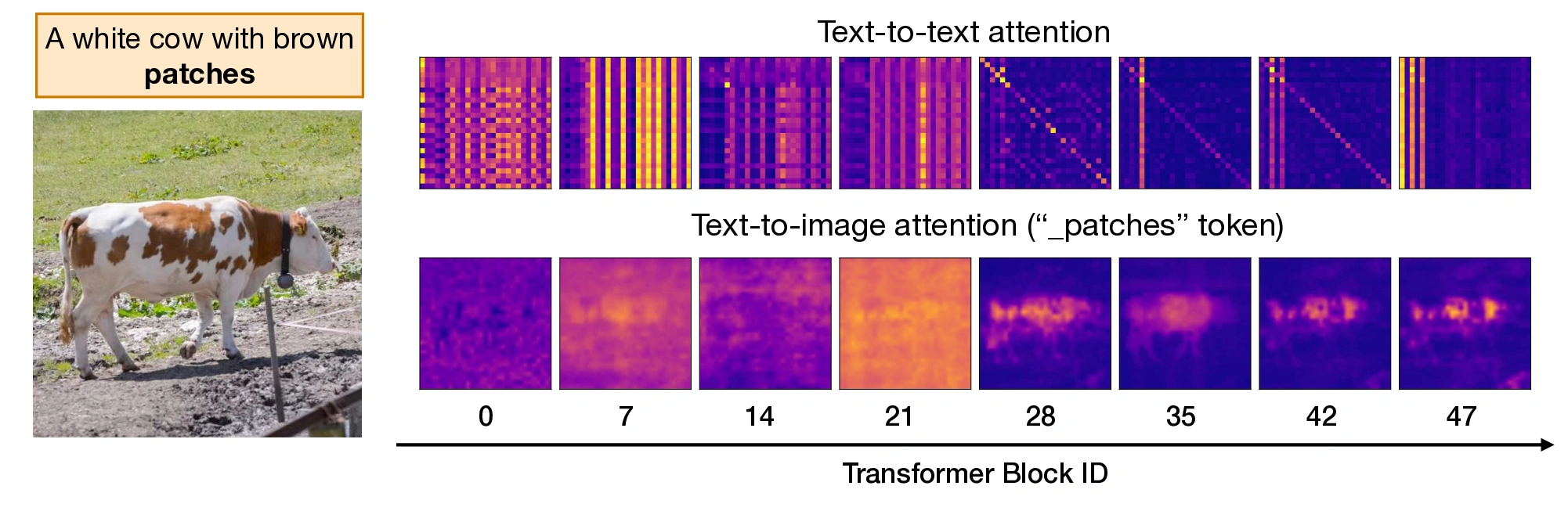

We discover that diffusion transformers exhibit attention sink behaviors similar to large language models. Specifically, we identify Global Attention Sinks (GAS): tokens that attract disproportionately high and nearly uniform attention across all text and image tokens simultaneously. These sinks emerge consistently in deeper layers but are absent in early layers, serving as indicators of semantic structure. While uninformative themselves, they can suppress useful signals when they occur on meaningful tokens, which we address through strategic filtering and redistribution.

Key Findings: Our analysis reveals that GAS tokens emerge progressively with network depth, transitioning from diffuse attention patterns in early layers to structured, semantically meaningful distributions in deeper layers. This emergence correlates with the development of semantic understanding, providing insights into how diffusion transformers build hierarchical representations. By identifying and strategically filtering these attention sinks, we can redirect attention toward semantically relevant regions, leading to more accurate grounding and segmentation results.
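One way to make the "disproportionately high and nearly uniform" criterion concrete is sketched below. This is an assumed heuristic, not the detection rule used in the paper: a key token is flagged when its mean received attention exceeds a multiple of the median across tokens and its coefficient of variation across queries is small. The thresholds `ratio` and `max_cv` and both function names are hypothetical.

```python
from statistics import mean, median, pstdev


def find_sinks(attn, ratio=3.0, max_cv=0.25):
    """Return indices of key tokens that look like global attention sinks.

    attn[q][k] is the attention from query token q to key token k.
    A key is flagged if it receives high attention (mean above `ratio` times
    the median of all key means) that is also nearly uniform across queries
    (coefficient of variation below `max_cv`). Both thresholds are assumptions.
    """
    n_keys = len(attn[0])
    columns = [[row[k] for row in attn] for k in range(n_keys)]
    means = [mean(col) for col in columns]
    typical = median(means)
    sinks = []
    for k, col in enumerate(columns):
        cv = pstdev(col) / means[k] if means[k] > 0 else float("inf")
        if means[k] > ratio * typical and cv < max_cv:
            sinks.append(k)
    return sinks


def suppress(attn, sinks):
    """Zero the sink columns and renormalise each row to sum to 1."""
    cleaned = []
    for row in attn:
        kept = [0.0 if k in sinks else v for k, v in enumerate(row)]
        total = sum(kept)
        cleaned.append([v / total if total > 0 else 0.0 for v in kept])
    return cleaned


# Toy 3-query x 4-key attention matrix (hypothetical values, rows sum to 1).
attn = [
    [0.70, 0.20, 0.05, 0.05],
    [0.72, 0.03, 0.20, 0.05],
    [0.68, 0.05, 0.07, 0.20],
]
sinks = find_sinks(attn)
cleaned = suppress(attn, sinks)
```

Here key 0 draws roughly 70% of the attention from every query, so it is the only token flagged; after suppression the remaining attention in each row is rescaled, which makes the per-query differences among the other tokens easier to see.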

RefAM achieves state-of-the-art zero-shot referring image segmentation on the RefCOCO, RefCOCO+, and RefCOCOg datasets. Our attention magnet strategy proves crucial: by adding stop words to absorb surplus attention and then filtering them out, we obtain cleaner attention maps focused on the target object. This simple technique improves localization precision without requiring any fine-tuning or architectural modifications, and RefAM outperforms previous training-free methods on every reported split while remaining simple.

| Method | RefCOCO val | RefCOCO testA | RefCOCO testB | RefCOCO+ val | RefCOCO+ testA | RefCOCO+ testB | RefCOCOg val | RefCOCOg test |
|---|---|---|---|---|---|---|---|---|
| Zero-shot methods w/o additional training | | | | | | | | |
| Grad-CAM | 23.44 | 23.91 | 21.60 | 26.67 | 27.20 | 24.84 | 23.00 | 23.91 |
| Global-Local | 24.55 | 26.00 | 21.03 | 26.62 | 29.99 | 22.23 | 28.92 | 30.48 |
| Global-Local | 21.71 | 24.48 | 20.51 | 23.70 | 28.12 | 21.86 | 26.57 | 28.21 |
| Ref-Diff | 35.16 | 37.44 | 34.50 | 35.56 | 38.66 | <u>31.40</u> | 38.62 | 37.50 |
| TAS | 29.53 | 30.26 | 28.24 | 33.21 | 38.77 | 28.01 | 35.84 | 36.16 |
| HybridGL | <u>41.81</u> | <u>44.52</u> | <u>38.50</u> | <u>35.74</u> | <u>41.43</u> | 30.90 | <u>42.47</u> | <u>42.97</u> |
| RefAM (ours) | **46.91** | **52.30** | **43.88** | **38.57** | **42.66** | **34.90** | **45.53** | **44.45** |

All values are oIoU. Bold = best, underlined = second-best among training-free methods.
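For readers unfamiliar with the metric, oIoU (overall IoU) is commonly computed as the total intersection area divided by the total union area accumulated over all samples, rather than as the mean of per-sample IoUs. The sketch below illustrates that standard definition on flattened binary masks; it is not the authors' evaluation code, and the function names are chosen for illustration.

```python
def overall_iou(preds, gts):
    """Overall IoU: summed intersection over summed union across all samples.

    preds, gts: lists of flattened binary masks (lists of 0/1 values).
    """
    inter = union = 0
    for pred, gt in zip(preds, gts):
        inter += sum(1 for p, g in zip(pred, gt) if p and g)
        union += sum(1 for p, g in zip(pred, gt) if p or g)
    return inter / union if union else 0.0


def mean_iou(preds, gts):
    """Mean of per-sample IoUs, shown only for contrast with overall IoU."""
    scores = []
    for pred, gt in zip(preds, gts):
        inter = sum(1 for p, g in zip(pred, gt) if p and g)
        union = sum(1 for p, g in zip(pred, gt) if p or g)
        scores.append(inter / union if union else 0.0)
    return sum(scores) / len(scores)


# Two toy samples with 4 pixels each (hypothetical values).
preds = [[1, 1, 0, 0], [1, 0, 0, 0]]
gts = [[1, 0, 0, 0], [1, 1, 1, 1]]
oiou = overall_iou(preds, gts)   # (1 + 1) / (2 + 4)
miou = mean_iou(preds, gts)      # (1/2 + 1/4) / 2
```

Because intersections and unions are pooled before dividing, oIoU gives more weight to samples with large objects than a per-sample average does.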

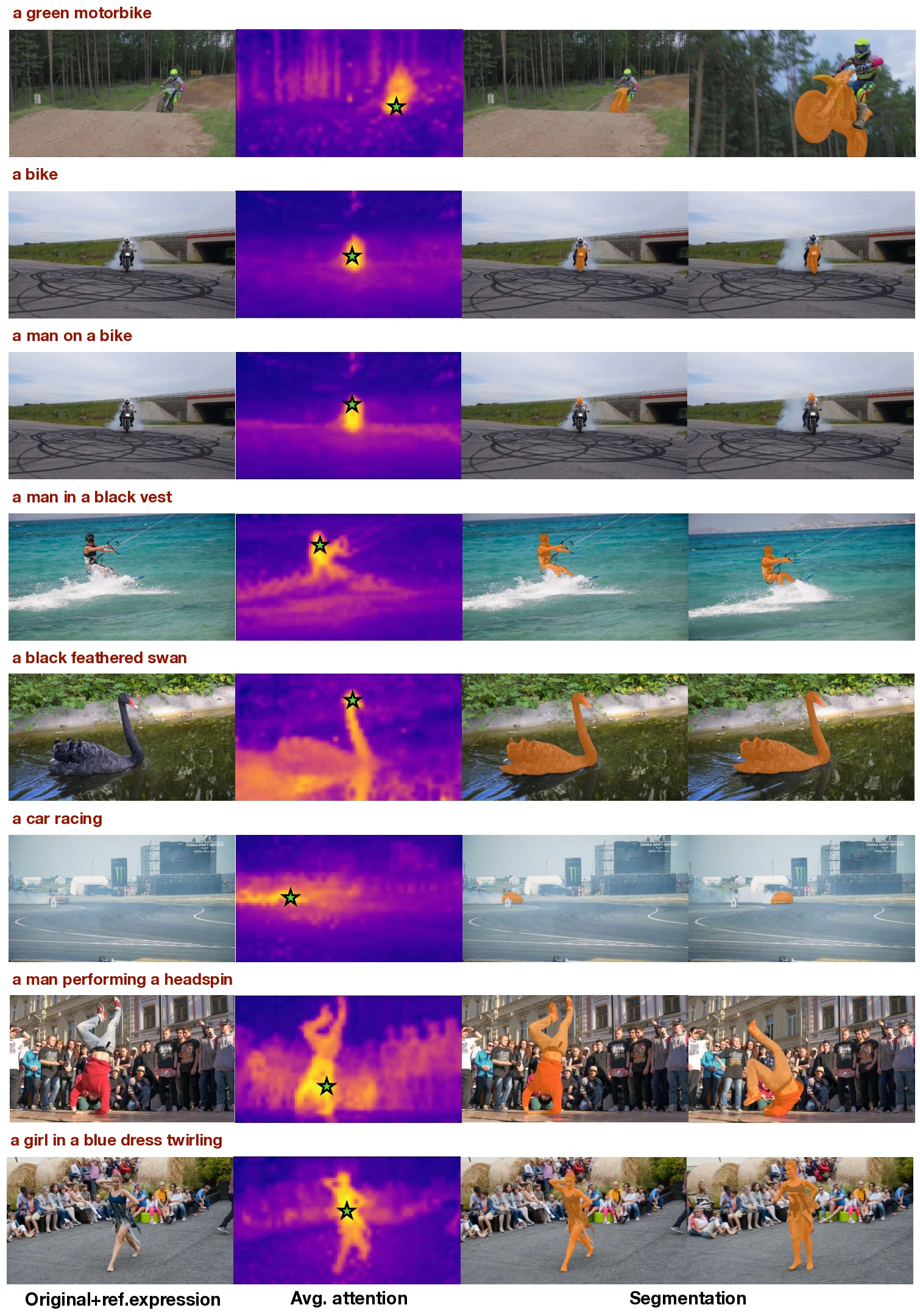

RefAM extends to referring video segmentation using Mochi, a video diffusion transformer. We extract cross-attention maps from the first frame with our attention magnet strategy and leverage SAM2's temporal propagation for consistent video segmentation. The attention magnet filtering proves even more crucial in video contexts, where temporal consistency can amplify attention noise. Among training-free methods that do not rely on Grounded-SAM, RefAM + SAM2 reaches 57.6 J&F, compared with 46.9 for the strongest baseline, G-L (SAM) + SAM2.

| Method | J&F | J | F |
|---|---|---|---|
| Training-Free with Grounded-SAM | | | |
| Grounded-SAM | 65.2 | 62.3 | 68.0 |
| Grounded-SAM2 | 66.2 | 62.6 | 69.7 |
| AL-Ref-SAM2 | 74.2 | 70.4 | 78.0 |
| Training-Free | | | |
| G-L + SAM2 | 40.6 | 37.6 | 43.6 |
| G-L (SAM) + SAM2 | 46.9 | 44.0 | 49.7 |
| RefAM + SAM2 (ours) | 57.6 | 54.5 | 60.6 |

| AM | NP | SB | J&F | J | F | PA |
|---|---|---|---|---|---|---|
| ✓ | ✓ | ✓ | 57.6 | 54.5 | 60.6 | 68.9 |
| - | ✓ | ✓ | 54.4 | 50.9 | 57.6 | 59.8 |
| ✓ | ✓ | - | 55.1 | 52.2 | 58.0 | 67.2 |
| - | ✓ | - | 53.1 | 49.5 | 56.7 | 60.2 |
| - | - | - | 50.0 | 46.8 | 53.2 | 52.5 |

AM = attention magnets, NP = noun phrase filtering, SB = spatial bias, PA = point accuracy
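The sketch below shows how the quantities in these tables relate to one another. J&F is the standard average of region similarity J and boundary accuracy F. For point accuracy (PA), the code assumes that a single point is taken at the maximum of the first-frame heatmap and counted as correct when it falls inside the ground-truth mask; this reading of PA, and the helper names, are assumptions for illustration rather than the paper's exact protocol.

```python
def argmax_point(heatmap):
    """Return (row, col) of the highest value in a 2D heatmap (list of rows)."""
    coords = [(r, c) for r in range(len(heatmap)) for c in range(len(heatmap[0]))]
    return max(coords, key=lambda rc: heatmap[rc[0]][rc[1]])


def point_accuracy(points, gt_masks):
    """Fraction of points that land inside their ground-truth binary mask."""
    hits = sum(1 for (r, c), mask in zip(points, gt_masks) if mask[r][c])
    return hits / len(points)


def j_and_f(j, f):
    """J&F is the mean of region similarity J and boundary accuracy F."""
    return (j + f) / 2


# Toy first-frame heatmap and ground-truth mask (hypothetical values).
heatmap = [[0.1, 0.2],
           [0.9, 0.3]]
gt_mask = [[0, 0],
           [1, 1]]
point = argmax_point(heatmap)
pa = point_accuracy([point], [gt_mask])

# Full RefAM row of the ablation: J = 54.5, F = 60.6.
full_model_jf = j_and_f(54.5, 60.6)
```

A point selected this way could then serve as a prompt for a promptable segmenter such as SAM2, which is one plausible reason a cleaner heatmap (higher PA) goes together with higher J and F in the ablation.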

RefAM provides a powerful and accessible tool for zero-shot referring segmentation, enabling advances in applications such as medical image analysis, robotics, autonomous systems, and assistive technologies for people with visual impairments. The attention magnet approach democratizes access to high-quality referring segmentation capabilities without requiring specialized training or fine-tuning.

By providing training-free, zero-shot methods that work across different domains (images and videos), RefAM is particularly valuable for researchers and practitioners who may not have access to large computational resources or extensive labeled datasets. The simplicity of the approach makes it broadly applicable and easy to integrate into existing systems.

However, as with any advancement in computer vision and AI, there are potential ethical considerations. Improved referring segmentation capabilities could be misused for surveillance or privacy violation purposes. We emphasize the importance of deploying these technologies responsibly, with appropriate safeguards and consideration for privacy rights. We encourage the research community to continue developing ethical guidelines for the deployment of vision-language technologies and to consider the broader societal implications of these advancements.

@article{kukleva2025refam,
  title={RefAM: Attention Magnets for Zero-Shot Referral Segmentation},
  author={Kukleva, Anna and Simsar, Enis and Tonioni, Alessio and Naeem, Muhammad Ferjad and Tombari, Federico and Lenssen, Jan Eric and Schiele, Bernt},
  journal={arXiv preprint arXiv:2509.22650},
  year={2025}
}